Selling complex products and services can be a time-consuming process. But with smart use of generative AI, businesses can reduce the time spent on general requirements dramatically, allowing them to focus on areas which will make their offer stand out.

Companies offering large, complex products and services know that there are often many stakeholders involved in the buying process, each with their own requirements.

Those requirements are often similar across clients, especially from those within the same industry, making the process of responding both tedious and protracted.

If the work is distributed amongst a number of sales engineers or representatives, the quality of responses can vary, resulting in a less professional impression.

Use Generative AI

Columbus has developed a generative AI solution to reduce the repetitive routine work and errors associated with answering RFQs (requests for quotations). It uses previous replies to RFQs as a knowledge database and draws on that to suggest responses to new RFQ questions.

The model creates response suggestions with links to the relevant previous data it has used in the process, allowing the user to fact-check each of its recommendations.

Challenge: Data completeness and precision

For this solution to work, there must be a sufficient overlap of customer requirements, enabling the model to use prior responses to author new suggestions. Additionally, each requirement-response pair should be unambiguous and precise enough for the model to derive accurate conclusions and apply them to new inquiries.

You can use your existing RFQ data to gauge how well it would support future RFQs by simulating how well one set of RFQs answers another. Both the precision and the completeness of the data can be simulated this way.

Assess precision of past RFQ responses

To verify the precision of each requirement in isolation, run a simulation that rewords each requirement slightly without changing its meaning: use different words and construct the sentences differently. Then let your LLM answer the reworded requirements, using the original requirements and answers as its knowledge. The percentage of requirements it can answer is the precision.

With a low percentage, the requirements and the responses are too vague to provide the knowledge needed to answer new similar questions, and you may choose to omit this data set from your model. With a high percentage, the quality is robust, and it should be included.
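The precision simulation described above can be sketched in a few lines of Python. This is a minimal illustration, not the Columbus implementation: `simulate_precision`, `toy_paraphrase` and `toy_answer` are hypothetical names, and the two toy functions are simple word-matching stand-ins for the calls you would make to your own LLM.

```python
from typing import Callable, Optional

# One past RFQ entry: (requirement text, response text).
Pair = tuple[str, str]


def simulate_precision(
    pairs: list[Pair],
    paraphrase: Callable[[str], str],
    answer: Callable[[str, list[Pair]], Optional[str]],
) -> float:
    """Return the percentage of reworded requirements that could be answered."""
    if not pairs:
        raise ValueError("need at least one requirement-response pair")
    answered = 0
    for requirement, _response in pairs:
        reworded = paraphrase(requirement)
        # The original pairs act as the knowledge base for the reworded question.
        if answer(reworded, pairs) is not None:
            answered += 1
    return 100.0 * answered / len(pairs)


def toy_paraphrase(requirement: str) -> str:
    """Stand-in for an LLM rewording step (assumption): swap a few synonyms."""
    synonyms = {"must": "shall", "support": "handle", "system": "solution"}
    return " ".join(synonyms.get(w, w) for w in requirement.lower().split())


def toy_answer(question: str, knowledge: list[Pair]) -> Optional[str]:
    """Stand-in for an LLM answering step (assumption): reuse the response
    whose requirement shares enough words with the question, else give up."""
    q_words = set(question.lower().split())
    best, best_score = None, 0.0
    for requirement, response in knowledge:
        r_words = set(requirement.lower().split())
        score = len(q_words & r_words) / len(q_words | r_words)
        if score > best_score:
            best, best_score = response, score
    return best if best_score >= 0.5 else None
```

In practice you would replace the two toy functions with LLM calls and probably also compare each suggested answer with the original response, so that a confident but wrong answer does not count towards the percentage.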

Assess completeness of past RFQ responses

The following approach can be used to simulate the data completeness:

Take one of the existing RFQ responses in your data and examine how much of that RFQ response could be answered based on knowledge from the other RFQ responses as reference data.

Do the same with different RFQ responses as the target and others as reference data to obtain the mean and variability in percentages. With a high mean and low variability, your model will be able to consistently provide automated responses to new RFQs, assuming new clients have similar requirements to your past clients.

With low percentages and low variability, there is not enough overlap between client requirements to rely on knowledge from past RFQ responses. With high variability, the picture is unclear unless you can segment the RFQ responses, for example by industry, so that the variability within each segment is lower.
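The completeness simulation is essentially a leave-one-out loop over your past RFQs. The sketch below assumes each RFQ is reduced to a list of requirement texts; `simulate_completeness`, `summarize` and `toy_can_answer` are hypothetical names, and `toy_can_answer` is a crude exact-match stand-in for asking your LLM whether the reference data covers a requirement.

```python
from statistics import mean, pstdev
from typing import Callable


def simulate_completeness(
    rfqs: dict[str, list[str]],
    can_answer: Callable[[str, list[str]], bool],
) -> dict[str, float]:
    """For each RFQ, return the percentage of its requirements that can be
    answered using only the other RFQs as reference data."""
    if len(rfqs) < 2:
        raise ValueError("need at least two RFQs to hold one out")
    results = {}
    for target, requirements in rfqs.items():
        if not requirements:
            raise ValueError(f"RFQ {target!r} has no requirements")
        # Reference data: every requirement from every RFQ except the target.
        reference = [
            req
            for name, reqs in rfqs.items()
            if name != target
            for req in reqs
        ]
        covered = sum(1 for req in requirements if can_answer(req, reference))
        results[target] = 100.0 * covered / len(requirements)
    return results


def summarize(percentages: dict[str, float]) -> tuple[float, float]:
    """Return the mean and the (population) standard deviation."""
    values = list(percentages.values())
    return mean(values), pstdev(values)


def toy_can_answer(requirement: str, reference: list[str]) -> bool:
    """Stand-in for an LLM check (assumption): case-insensitive exact match."""
    return requirement.lower() in {r.lower() for r in reference}
```

Running `simulate_completeness` separately on each candidate segment (for instance one industry at a time) gives the per-segment mean and variability needed to judge whether segmentation helps.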

Let’s look at a couple of examples of completeness assessment:

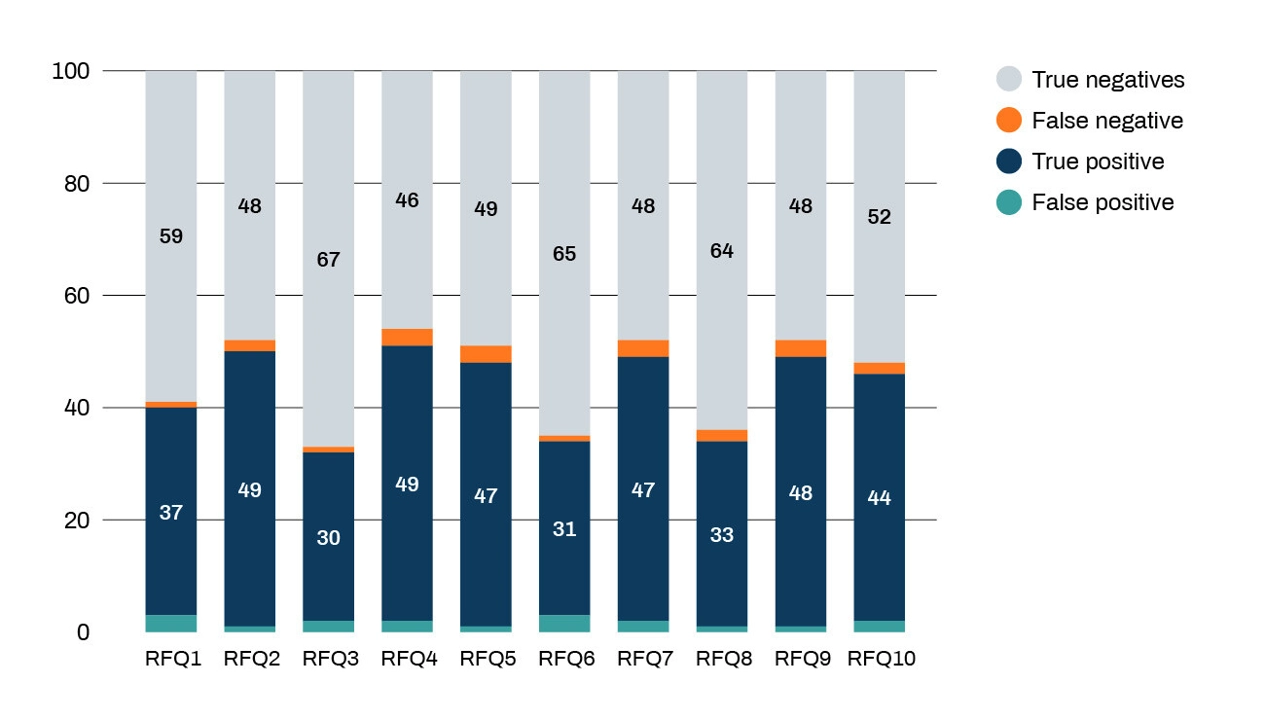

Example 1: Low completeness

What conclusions can we draw here? In a few cases (1-3%), the model made a mistake and gave a wrong answer, a so-called hallucination. In a similar share of cases (1-3%), it missed an answer.

The share of requirements for which it could find data in the remaining RFQs spans from 30% to 49%. This means that the solution would consistently produce at least 30% of the work needed to respond to requirements. Before accounting for review effort, that leaves about 70% of the work to be done manually, an efficiency increase of roughly 1.4 times. This should be compared against the effort to build the model and train the sales engineers to use it.

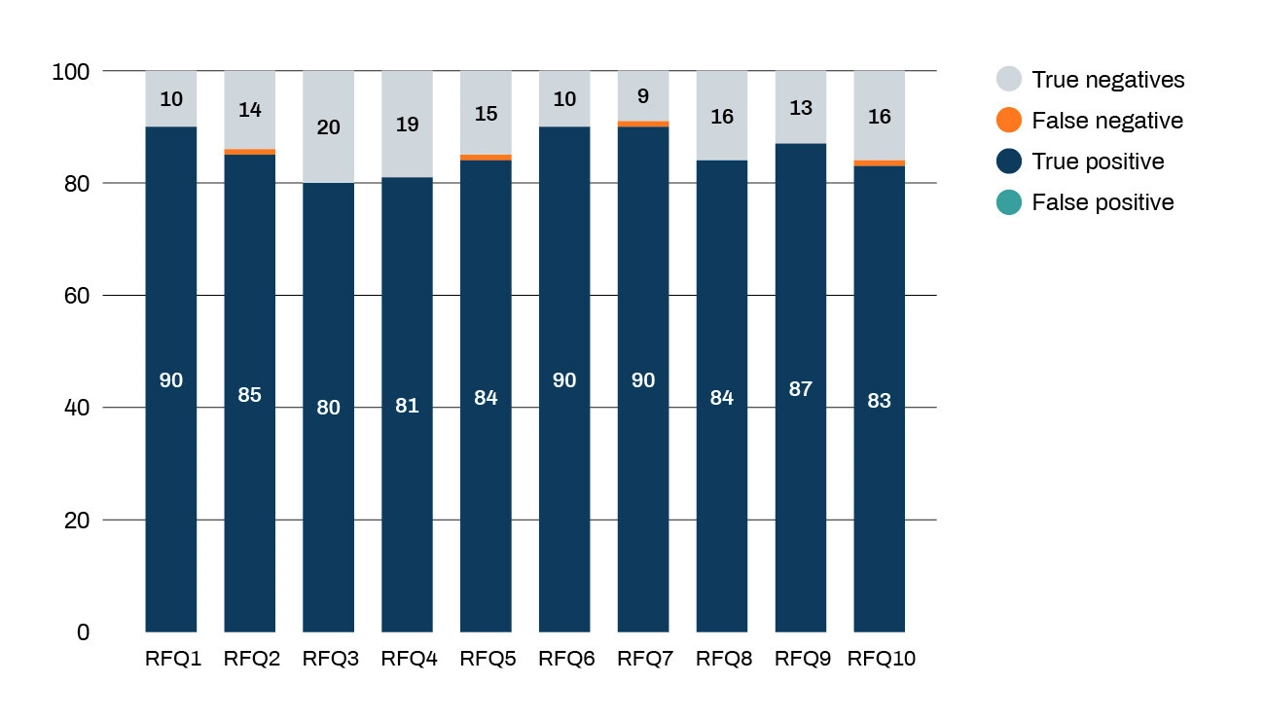

Example 2: High completeness

Now contrast example 1 with the results for example 2:

In this case, there are no hallucinations, and the overlap of requirements between RFQs is 80-90%. This means that the solution would consistently produce at least 80% of the work needed to respond to requirements, an efficiency increase of 5 times, rising to 10 times where the overlap reaches 90%.

Enough value to continue?

Is the model used in examples 1 and 2 valuable enough to implement? Maybe. It depends on the effort needed to review the model's answers and identify the wrong ones, the impact a wrong answer would have, and the effort needed to complete the remaining answers manually. It also depends on what percentage of completeness is valuable in your process. For example, is writing the RFQ response less work if a draft is 50% complete and you only need to review it and write the other half?
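One rough way to put numbers on that question is to estimate how much of the original manual effort remains once review time is included. The sketch below is an assumption-laden back-of-the-envelope model, not a measured result: `remaining_effort` and `speed_up` are hypothetical helpers, and `review_cost` (the effort to review one drafted answer relative to writing it from scratch) is a figure you would have to estimate for your own team.

```python
def remaining_effort(automated_share: float, review_cost: float = 0.0) -> float:
    """Fraction of the original manual effort still needed per RFQ.

    automated_share: fraction of requirements the model drafts (0 to 1).
    review_cost: effort to review one drafted answer, as a fraction of the
        effort to write it manually (0 to 1). Hypothetical parameter.
    """
    if not 0.0 <= automated_share <= 1.0:
        raise ValueError("automated_share must be between 0 and 1")
    if not 0.0 <= review_cost <= 1.0:
        raise ValueError("review_cost must be between 0 and 1")
    manual_part = 1.0 - automated_share
    review_part = automated_share * review_cost
    return manual_part + review_part


def speed_up(automated_share: float, review_cost: float = 0.0) -> float:
    """Efficiency increase: original effort divided by remaining effort."""
    effort = remaining_effort(automated_share, review_cost)
    return float("inf") if effort == 0 else 1.0 / effort
```

For the 50% case above, `speed_up(0.5, review_cost=0.2)` would mean that half of the answers are drafted automatically and reviewing a draft takes a fifth of the time needed to write it. The model ignores the cost of a wrong answer slipping through, which has to be weighed separately.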

Would you like to know more?

If you need assistance getting started, investigating the quality of your data, or determining the value of an AI solution for your RFQ responses, please contact Anders.Leander@columbusglobal.com or your Columbus advisor.